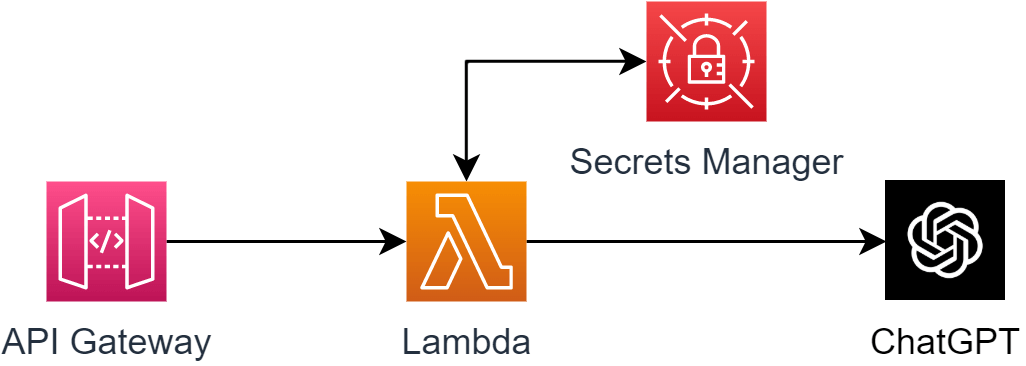

This guide will walk you through setting up and implementing ChatGPT with AWS Lambda and AWS API Gateway. ChatGPT is a powerful language model developed by OpenAI.

We start with an overview of ChatGPT and its capabilities, followed by a detailed explanation of how to set up and configure an AWS environment for running ChatGPT. We then walk through integrating ChatGPT into your application.

As an example, we will create an API that lets ChatGPT generate a random quote from a famous person.

Table of contents

📐 Setup ChatGPT API Keys

🔨 Create Lambda function

🔩 Connect API Gateway to Lambda

💭 Conclusion

📐 Setup ChatGPT API Keys

ChatGPT generates human-like text, which makes it a valuable tool for applications such as chatbots, language translation, sentiment analysis, text completion, and code generation. In our case, we want to use it to generate simple quotes.

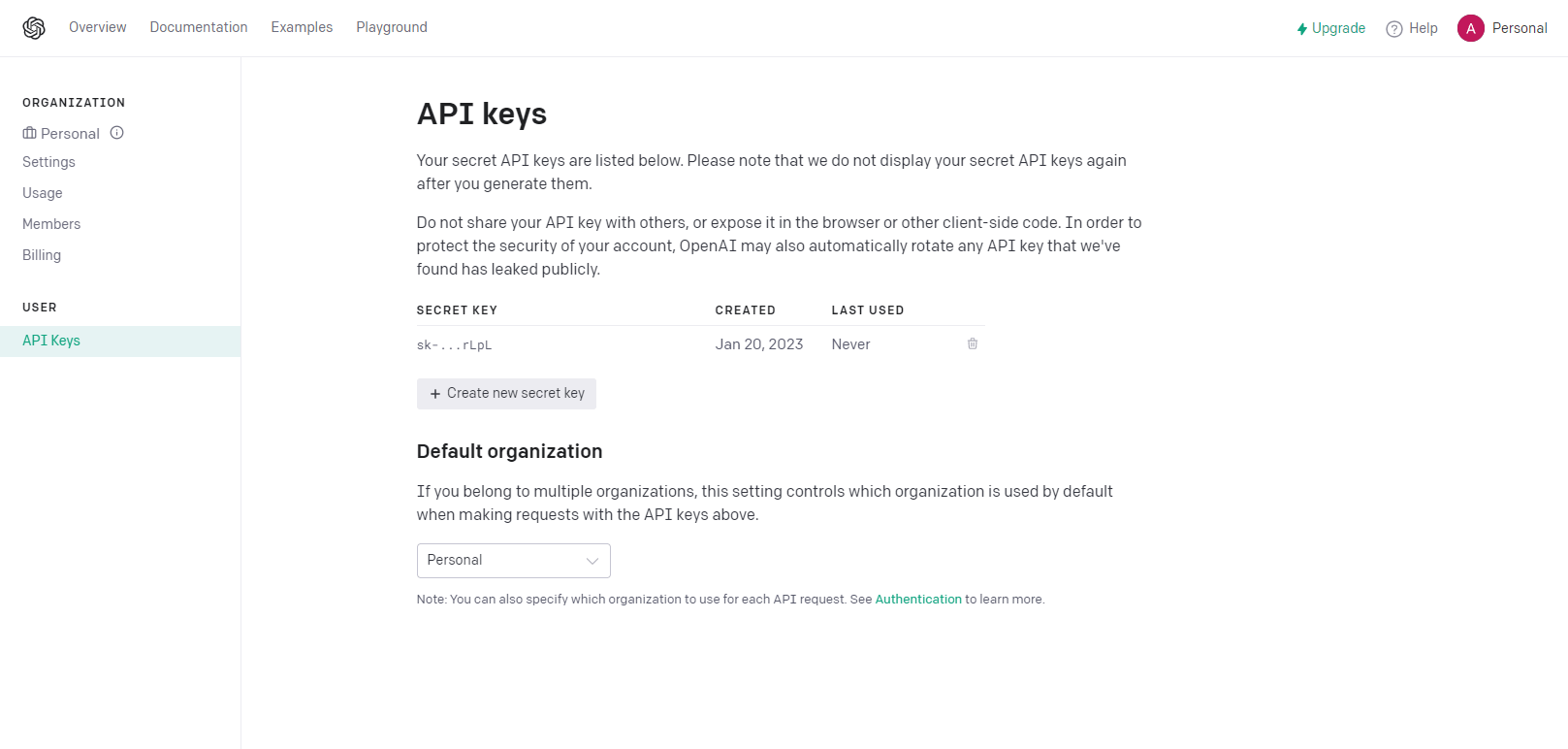

To set up the ChatGPT API keys, you need to create an account and go to your account page: https://beta.openai.com/account/api-keys

There we can generate a new API key. This key is required in the next step for the setup within AWS.

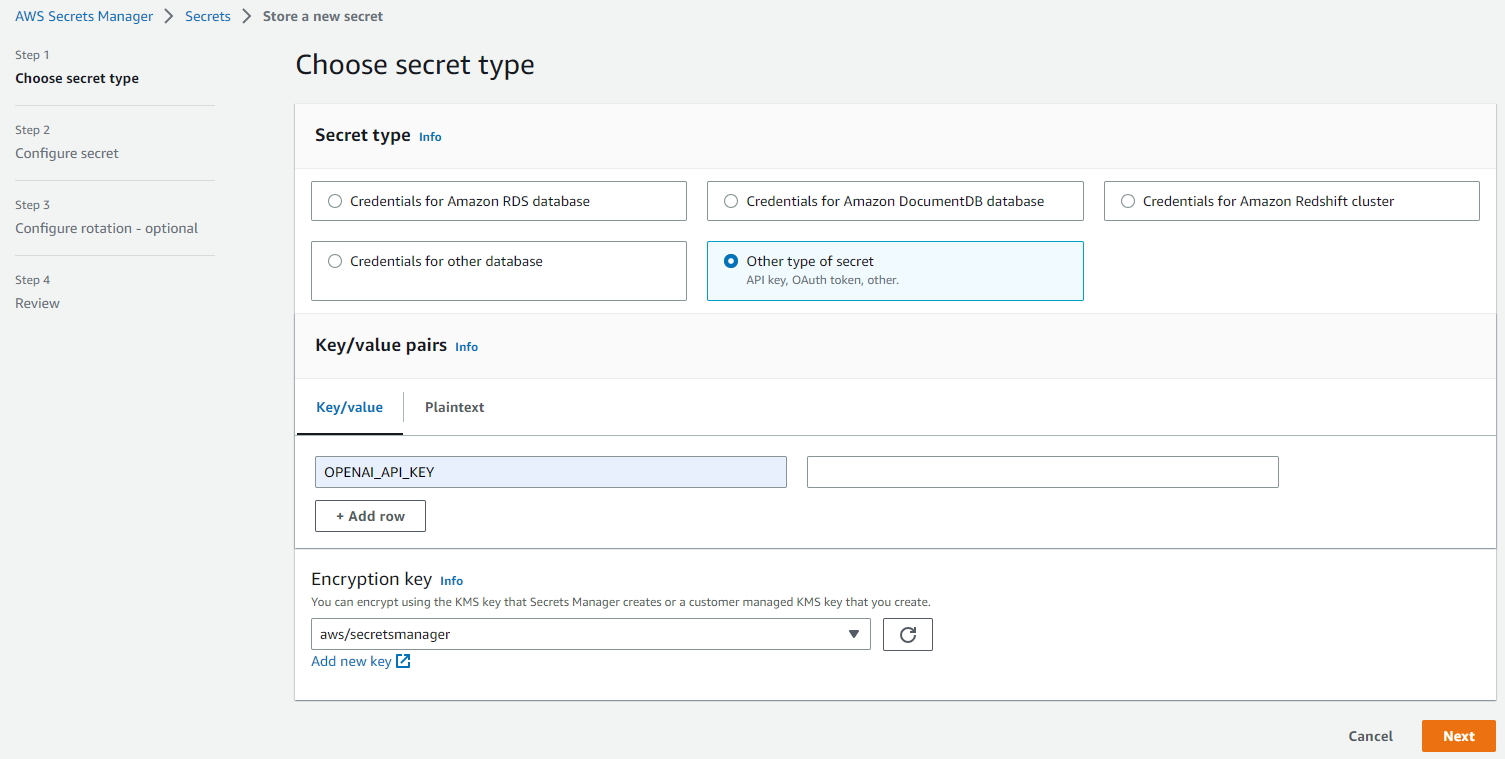

Afterward, we add the API key to AWS Secrets Manager, where it is stored securely. This way, the Lambda function never contains the API key in clear text. I always recommend AWS Secrets Manager when dealing with any kind of secret: it manages, rotates, and retrieves secrets throughout their lifecycle.
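To make the storage step concrete, here is a minimal sketch of creating the secret with boto3 instead of the console. It assumes the secret ID `OPENAI` and the JSON key `OPENAI_API_KEY`, which are the names the Lambda code in this guide reads. The helper names `build_secret_string` and `store_openai_key` are hypothetical, and the second helper needs boto3 installed and AWS credentials configured.

```python
import json


def build_secret_string(api_key, key_name="OPENAI_API_KEY"):
    """Return the JSON document that is stored as the secret value."""
    if not api_key:
        raise ValueError("api_key must not be empty")
    return json.dumps({key_name: api_key})


def store_openai_key(api_key, secret_id="OPENAI"):
    """Create the secret in AWS Secrets Manager (needs boto3 and credentials)."""
    import boto3  # imported lazily so build_secret_string works without boto3

    client = boto3.client("secretsmanager")
    return client.create_secret(
        Name=secret_id,
        SecretString=build_secret_string(api_key),
    )
```

Storing the key as a small JSON document rather than a bare string matches how the handler later decodes `SecretString` and looks up `OPENAI_API_KEY` inside it.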

🔨 Create Lambda function

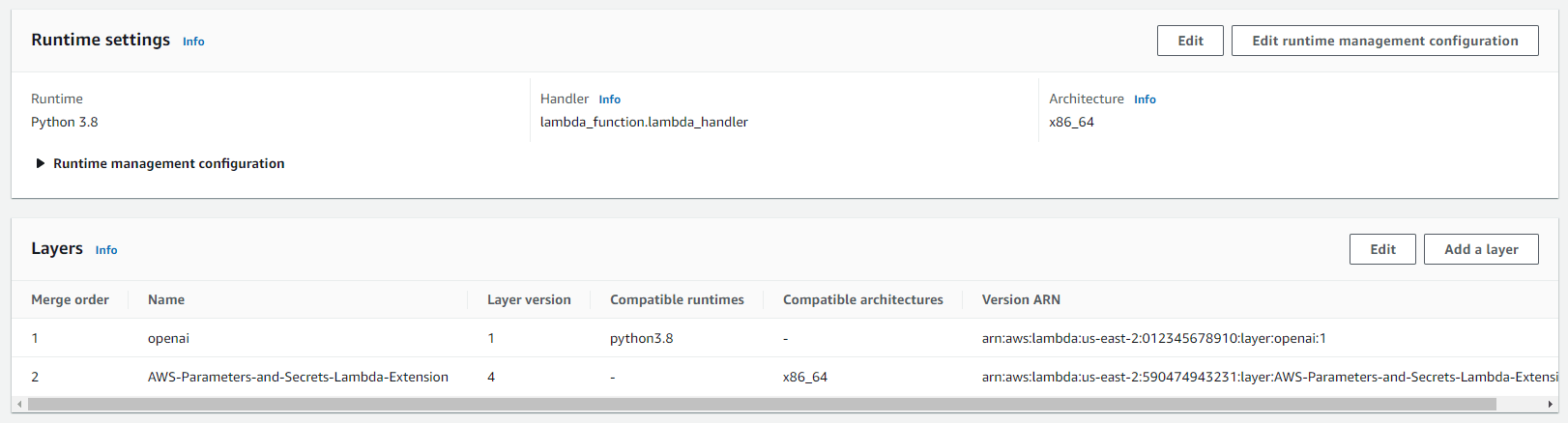

We create a new Lambda function based on Python 3.8. As we want to use the AWS Secrets Manager, we need to add a new layer. I have already written an article about the new Lambda Secrets Manager extension. For more details check it out:

Next, we need to integrate the OpenAI module for Python. It is not available by default within Lambda, so we need to create a custom layer for it. I have also written a detailed guide on how to create custom layers. You can follow that guide and install the required module with the command pip install openai:

Afterward, you should see two layers:

With all this information we can develop our code:

    import json
    import os

    import openai
    import urllib3


    def lambda_handler(event, context):
        # Secret ID in AWS Secrets Manager and the JSON key that holds the API key
        secret_id = "OPENAI"
        secret_name = "OPENAI_API_KEY"

        # The Secrets Manager extension listens on localhost:2773 and
        # authenticates requests with the Lambda session token
        auth_headers = {"X-Aws-Parameters-Secrets-Token": os.environ.get("AWS_SESSION_TOKEN")}
        http = urllib3.PoolManager()
        r = http.request(
            "GET",
            "http://localhost:2773/secretsmanager/get?secretId=" + secret_id,
            headers=auth_headers,
        )
        parameter = json.loads(r.data)
        OPENAI_API_KEY = json.loads(parameter["SecretString"])[secret_name]

        openai.api_key = OPENAI_API_KEY

        # Legacy completions interface (openai package versions below 1.0)
        response = openai.Completion.create(
            model="text-davinci-003",
            prompt="Get a random motivational quote",
            temperature=0.9,
            max_tokens=150,
        )
        quote = response["choices"][0]["text"]
        return quote

This code first retrieves the OpenAI API key from AWS Secrets Manager. It then calls the ChatGPT API, asks for a motivational quote, and returns it to the user. For the full documentation of the ChatGPT module, you can refer to the official documentation here.
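The secret lookup in the handler can be factored into a small helper that is easier to test and fails with a clear message. This is a minimal sketch, assuming the extension returns a JSON body whose `SecretString` field is itself a JSON document, which is what the handler code relies on. The name `extract_secret_value` is hypothetical.

```python
import json


def extract_secret_value(raw_body, key_name):
    """Pull one key out of the JSON body returned by the secrets extension."""
    payload = json.loads(raw_body)
    if "SecretString" not in payload:
        raise KeyError("response has no SecretString field")
    # SecretString is a JSON document encoded as a string, so decode it again.
    secret = json.loads(payload["SecretString"])
    if key_name not in secret:
        raise KeyError(f"secret has no key named {key_name!r}")
    return secret[key_name]
```

In the handler, `OPENAI_API_KEY = extract_secret_value(r.data, secret_name)` would then stand in for the two `json.loads` lines.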

It is advisable to increase the Lambda function timeout from its 3-second default, as ChatGPT can take some time to generate the response. You can adjust this setting in the Lambda configuration tab.
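The timeout can also be set programmatically. The sketch below assumes boto3 is installed and AWS credentials are configured. The function name `quote-generator` in the usage note and both helper names are hypothetical. Lambda accepts timeouts between 1 and 900 seconds, which the first helper checks before any call is made.

```python
def timeout_update_args(function_name, timeout_seconds):
    """Build the arguments for Lambda's update_function_configuration call."""
    if not 1 <= timeout_seconds <= 900:
        raise ValueError("Lambda timeout must be between 1 and 900 seconds")
    return {"FunctionName": function_name, "Timeout": timeout_seconds}


def set_lambda_timeout(function_name, timeout_seconds):
    """Apply the new timeout (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the validation helper works without boto3

    client = boto3.client("lambda")
    return client.update_function_configuration(
        **timeout_update_args(function_name, timeout_seconds)
    )
```

For example, `set_lambda_timeout("quote-generator", 30)` would give the function 30 seconds to wait for the completion.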

Make sure that the Lambda execution role is allowed to retrieve the API key from AWS Secrets Manager and decrypt it:

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "secretsmanager:GetSecretValue",
                    "kms:Decrypt"
                ],
                "Resource": [
                    "arn:aws:secretsmanager:<region>:<accountID>:secret:<secretName>",
                    "arn:aws:kms:<region>:<accountID>:key/<keyID>"
                ]
            }
        ]
    }

🔩 Connect API Gateway to Lambda

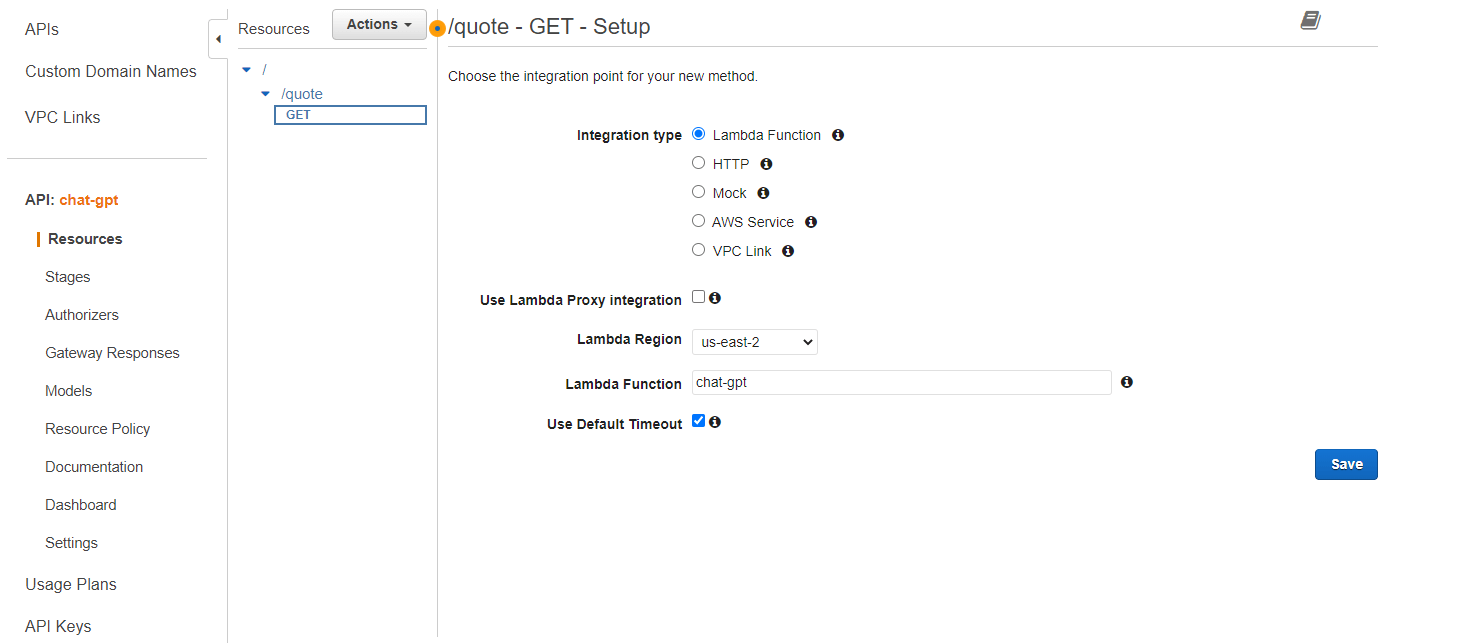

We want to retrieve the motivational quote through an API so that we can integrate it into other applications. Let us create a new API in API Gateway for this. As the resource, we create a new endpoint called quote and connect it to our existing Lambda function. In our case, we don't need Lambda Proxy Integration.
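Without proxy integration, API Gateway by default passes the handler's return value through as the response body, which is why the handler can return a plain string. If you later switch to Lambda Proxy Integration, the handler has to return a structured response instead. Here is a minimal sketch of that shape, with `to_proxy_response` as a hypothetical helper name and the `quote` field as an assumed payload layout:

```python
import json


def to_proxy_response(quote, status_code=200):
    """Wrap a quote in the response format Lambda proxy integration expects."""
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        # The body must be a string, so the payload is serialized here.
        # strip() removes the leading newlines the completion often starts with.
        "body": json.dumps({"quote": quote.strip()}),
    }
```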

That's the whole setup needed to expose ChatGPT via our own API. Next, we save and deploy it. You can deploy the API directly from the Actions tab in the Resources section. Afterward, we invoke the API and retrieve our quote.

    $ curl -s https://execute-api.us-east-2.amazonaws.com/Test/quote
    "\n\n\"If you can't fly then run, if you can't run then walk, if you can't walk then crawl, but whatever you do you have to keep moving forward.\" \u2015 Martin Luther King Jr."

💭 Conclusion

This guide has provided a detailed step-by-step approach for implementing ChatGPT on AWS. We have explained the capabilities of ChatGPT and how it can be integrated. Furthermore, we have provided an example of a use case for ChatGPT on AWS.

By following this guide, you should now have a solid understanding of how to securely implement ChatGPT on AWS and be able to use its capabilities to enhance your business processes. Now it is your turn to implement ChatGPT in your applications.
