Introduction

In my daily work managing cloud infrastructure, I often faced the challenge of efficiently configuring services and running commands across many machines. With numerous EC2 instances running different applications, it was important to ensure consistent security settings and configurations. However, manually logging into each instance, executing commands, and checking configurations was not only time-consuming but also prone to mistakes.

I remember a project where we needed to apply a standard security hardening process across our fleet. Each instance required specific configurations - like turning off unused services, applying security patches, and setting up logging practices. The traditional way of handling these tasks individually for every instance became overwhelming, especially as our infrastructure grew.

After some digging I found AWS Systems Manager (SSM) documents. With SSM, I was able to create automation documents that defined the specific actions needed for hardening our instances. I could roll out security configurations in a consistent way across all machines, ensuring that no instance was left vulnerable or misconfigured.

The best part of SSM was its ability to centralize management tasks. I could write a single document that included all the hardening steps—such as installing necessary security tools, configuring firewall rules, and applying operating system updates - and execute it across the fleet with just a few clicks. This not only saved a lot of time but also ensured that our security was the same on all instances.

Using SSM also meant that I could easily update the configuration in the document and redeploy it as our security policies changed. This capability transformed my approach to cloud management, making what was once a difficult challenge into a smoother process that improved our security and compliance efforts overall.

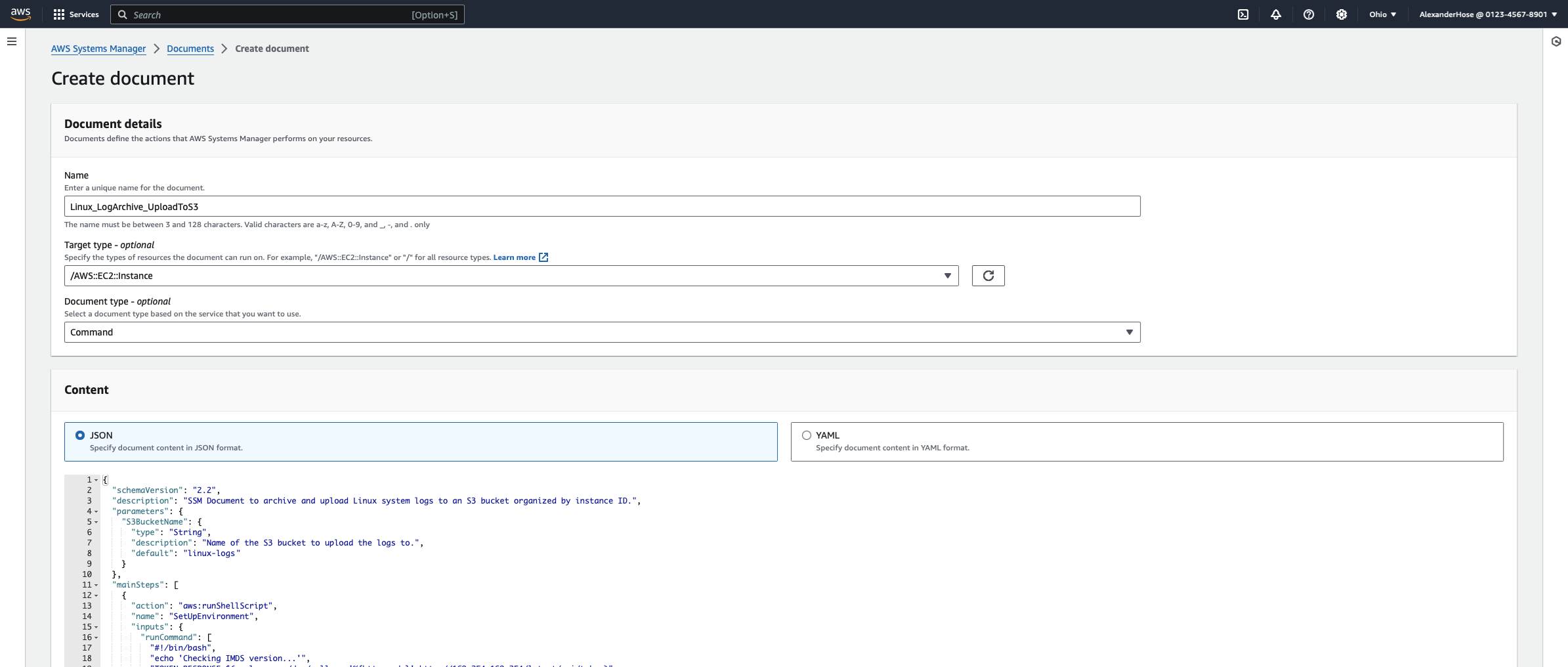

An Example of Streamlining Log Management

Let’s take an example and consider the task of uploading logs from the /var/log directory to a centralized S3 bucket. This is just one way SSM can help simplify tasks and improve efficiency in managing cloud resources. We’ll explore the script together, breaking it down into separate steps to understand how each part contributes to the overall process.

Defining the Document Structure and Parameters

The beginning section of the script sets up essential information and parameters that guide how the SSM document operates. It includes the schema version, which ensures compatibility with AWS Systems Manager's features, and a description, which briefly outlines the purpose of the script. Additionally, this part defines a parameter for the S3 bucket name, allowing customization by specifying which S3 bucket to upload logs to. The default bucket name can be overridden, making it easy to reuse the script across different environments without needing changes in the code itself.

{

"schemaVersion": "2.2",

"description": "SSM Document to archive and upload Linux system logs to an S3 bucket organized by instance ID.",

"parameters": {

"S3BucketName": {

"type": "String",

"description": "Name of the S3 bucket to upload the logs to.",

"default": "linux-logs-0123456789"

}

}

}Set Up Environment and Fetch Metadata

The script starts by checking the Instance Metadata Service (IMDS) version to determine how to retrieve the instance ID. If IMDSv2 is enabled, it uses a token; otherwise, it uses the traditional method without a token. It then retrieves the instance ID and the current timestamp, storing them in a temporary JSON file (/tmp/log_metadata.json). This file is crucial so we can use the information in the following steps.

{

"action": "aws:runShellScript",

"name": "SetUpEnvironment",

"inputs": {

"runCommand": [

"#!/bin/bash",

"echo 'Checking IMDS version...'",

"TOKEN_RESPONSE=$(curl -s -o /dev/null -w '%{http_code}' http://169.254.169.254/latest/api/token)",

"if [ \"$TOKEN_RESPONSE\" -eq 405 ]; then",

" echo 'IMDSv2 is being used, obtaining instance ID with token...'",

" TOKEN=$(curl -s -X PUT -H 'X-aws-ec2-metadata-token-ttl-seconds: 21600' http://169.254.169.254/latest/api/token)",

" INSTANCE_ID=$(curl -s -H \"X-aws-ec2-metadata-token: $TOKEN\" http://169.254.169.254/latest/meta-data/instance-id)",

"else",

" echo 'IMDSv1 is being used, obtaining instance ID without token...'",

" INSTANCE_ID=$(curl -s http://169.254.169.254/latest/meta-data/instance-id)",

"fi",

"DATE_TIME=$(date +'%Y-%m-%d_%H-%M-%S')",

"echo \"Instance ID: $INSTANCE_ID\"",

"echo \"Timestamp: $DATE_TIME\"",

"echo '{\"InstanceId\":\"'$INSTANCE_ID'\",\"Timestamp\":\"'$DATE_TIME'\"}' > /tmp/log_metadata.json"

]

}

}

How It Works:

- It uses

curlto check the IMDS version by sending a request to the token endpoint. If it receives a 405 error, it means IMDSv2 is active. - Based on the IMDS version, it either fetches the instance ID using a token (for IMDSv2) or directly (for IMDSv1).

- It records the current date and time in a specific format.

- Finally, it creates a JSON file

/tmp/log_metadata.jsonthat stores the instance ID and timestamp.

Check for and Install AWS CLI

The next step checks if the AWS Command Line Interface (CLI) is installed. If not found, the script identifies the Linux distribution and installs the appropriate version of the AWS CLI. This ensures that the machine can interact with AWS services.

{

"action": "aws:runShellScript",

"name": "CheckAndInstallAWSCLI",

"inputs": {

"runCommand": [

"#!/bin/bash",

"if ! command -v aws &> /dev/null; then",

" echo 'AWS CLI not found, installing...'",

" if [ -f /etc/os-release ] && grep -qi 'amazon linux' /etc/os-release; then",

" yum install -y aws-cli",

" elif [ -f /etc/os-release ] && grep -qi 'ubuntu' /etc/os-release; then",

" apt update && apt install -y awscli",

" else",

" echo 'Unsupported Linux distribution. Exiting.'",

" exit 1",

" fi",

"else",

" echo 'AWS CLI already installed.'",

"fi"

]

}

}

How It Works:

- The script checks for the AWS CLI using the

command -vcommand. If it is not found, it installs the appropriate package based on the Linux distribution. - If the OS is Amazon Linux, it uses

yum, and for Ubuntu, it usesapt. - If the distribution is unsupported, the script exits with an error.

Archive Logs

The script checks if the /var/log directory exists and contains files. If logs are present, it creates a compressed archive of all the log files in that directory. The archive is named using the timestamp obtained earlier, ensuring that each archive is uniquely identifiable. If the directory is empty, the script will exit and inform the user that there are no logs to archive.

{

"action": "aws:runShellScript",

"name": "ArchiveLogs",

"inputs": {

"runCommand": [

"#!/bin/bash",

"if [ -d /var/log ] && [ \"$(ls -A /var/log)\" ]; then",

" echo 'Archiving logs in /var/log...'",

" tar -czf /tmp/logs_$(jq -r '.Timestamp' /tmp/log_metadata.json).tar.gz -C /var/log . 2>/dev/null || true",

"else",

" echo 'No logs found in /var/log to archive.'",

" exit 1",

"fi"

]

}

}

How It Works:

- It checks if the

/var/logdirectory exists and contains files. - If logs are present, it creates a compressed tar archive of the logs and saves it in

/tmp, naming the file with the current timestamp. - The

tarcommand suppresses any error messages, ensuring that the script doesn’t exit unexpectedly if an error occurs (thanks to|| true).

Upload Archive to S3

After creating the archive, the script reads the instance ID and timestamp from the JSON file to construct the path for uploading to S3. It attempts to upload the archive to a specified S3 bucket, organizing it by instance ID and timestamp in the S3 path. If the archive file does not exist, the script lists the contents of the /tmp directory to aid in troubleshooting and exits gracefully.

{

"action": "aws:runShellScript",

"name": "UploadToS3",

"inputs": {

"runCommand": [

"#!/bin/bash",

"INSTANCE_ID=$(jq -r '.InstanceId' /tmp/log_metadata.json)",

"DATE_TIME=$(jq -r '.Timestamp' /tmp/log_metadata.json)",

"ARCHIVE_FILE=/tmp/logs_$DATE_TIME.tar.gz",

"if [ -f \"$ARCHIVE_FILE\" ]; then",

" echo 'Uploading archive to S3 bucket...'",

" aws s3 cp \"$ARCHIVE_FILE\" s3://{{S3BucketName}}/logs/$INSTANCE_ID/logs_$DATE_TIME.tar.gz",

"else",

" echo 'Archive file does not exist. Listing files in /tmp directory...'",

" ls -l /tmp/",

" exit 1",

"fi"

]

}

}

How It Works:

- It reads the instance ID and timestamp from the metadata JSON file.

- It constructs the path to the archive file created in the previous step.

- If the archive file exists, it uploads it to the specified S3 bucket, organizing it by instance ID and timestamp.

- If the archive file does not exist, it lists the files in the

/tmpdirectory for troubleshooting.

Cleanup Temporary Files

Finally, the script cleans up by deleting the archive file and the temporary JSON file. This helps free up space and keeps the instance tidy after the task is completed.

{

"action": "aws:runShellScript",

"name": "Cleanup",

"inputs": {

"runCommand": [

"#!/bin/bash",

"echo 'Cleaning up local archive...'",

"rm -f /tmp/logs_$(jq -r '.Timestamp' /tmp/log_metadata.json).tar.gz",

"rm -f /tmp/log_metadata.json"

]

}

}

How It Works:

- It removes the archive file and the metadata JSON file from the

/tmpdirectory to free up space and maintain cleanliness.

Conclusion

This setup is lightweight and flexible, making it a useful tool for handling log management and similar tasks across multiple instances. It’s easy to extend, too - integrating it with AWS Lambda, for example, could allow for automatic triggers when certain events occur, helping automate routine maintenance or monitoring tasks even further.

However, there are some limitations worth noting. Unlike the aws:executeAwsApi action, which enables direct queries to AWS APIs, this approach requires workarounds for interacting with AWS services. Additionally, there’s no native method for passing variables between steps, which can make complex workflows trickier to implement. Despite these limitations, SSM documents remain a powerful and adaptable tool for lightweight automation, enabling streamlined operations and consistency across your AWS fleet.

Full Code

Below is the complete code for the SSM document.

{

"schemaVersion": "2.2",

"description": "SSM Document to archive and upload Linux system logs to an S3 bucket organized by instance ID.",

"parameters": {

"S3BucketName": {

"type": "String",

"description": "Name of the S3 bucket to upload the logs to.",

"default": "linux-logs-0123456789"

}

},

"mainSteps": [

{

"action": "aws:runShellScript",

"name": "SetUpEnvironment",

"inputs": {

"runCommand": [

"#!/bin/bash",

"echo 'Checking IMDS version...'",

"TOKEN_RESPONSE=$(curl -s -o /dev/null -w '%{http_code}' http://169.254.169.254/latest/api/token)",

"if [ \"$TOKEN_RESPONSE\" -eq 405 ]; then",

" echo 'IMDSv2 is being used, obtaining instance ID with token...'",

" TOKEN=$(curl -s -X PUT -H 'X-aws-ec2-metadata-token-ttl-seconds: 21600' http://169.254.169.254/latest/api/token)",

" INSTANCE_ID=$(curl -s -H \"X-aws-ec2-metadata-token: $TOKEN\" http://169.254.169.254/latest/meta-data/instance-id)",

"else",

" echo 'IMDSv1 is being used, obtaining instance ID without token...'",

" INSTANCE_ID=$(curl -s http://169.254.169.254/latest/meta-data/instance-id)",

"fi",

"DATE_TIME=$(date +'%Y-%m-%d_%H-%M-%S')",

"echo \"Instance ID: $INSTANCE_ID\"",

"echo \"Timestamp: $DATE_TIME\"",

"echo '{\"InstanceId\":\"'$INSTANCE_ID'\",\"Timestamp\":\"'$DATE_TIME'\"}' > /tmp/log_metadata.json"

]

}

},

{

"action": "aws:runShellScript",

"name": "CheckAndInstallAWSCLI",

"inputs": {

"runCommand": [

"#!/bin/bash",

"if ! command -v aws &> /dev/null; then",

" echo 'AWS CLI not found, installing...'",

" if [ -f /etc/os-release ] && grep -qi 'amazon linux' /etc/os-release; then",

" yum install -y aws-cli",

" elif [ -f /etc/os-release ] && grep -qi 'ubuntu' /etc/os-release; then",

" apt update && apt install -y awscli",

" else",

" echo 'Unsupported Linux distribution. Exiting.'",

" exit 1",

" fi",

"else",

" echo 'AWS CLI already installed.'",

"fi"

]

}

},

{

"action": "aws:runShellScript",

"name": "ArchiveLogs",

"inputs": {

"runCommand": [

"#!/bin/bash",

"if [ -d /var/log ] && [ \"$(ls -A /var/log)\" ]; then",

" echo 'Archiving logs in /var/log...'",

" tar -czf /tmp/logs_$(jq -r '.Timestamp' /tmp/log_metadata.json).tar.gz -C /var/log . 2>/dev/null || true",

"else",

" echo 'No logs found in /var/log to archive.'",

" exit 1",

"fi"

]

}

},

{

"action": "aws:runShellScript",

"name": "UploadToS3",

"inputs": {

"runCommand": [

"#!/bin/bash",

"INSTANCE_ID=$(jq -r '.InstanceId' /tmp/log_metadata.json)",

"DATE_TIME=$(jq -r '.Timestamp' /tmp/log_metadata.json)",

"ARCHIVE_FILE=/tmp/logs_$DATE_TIME.tar.gz",

"if [ -f \"$ARCHIVE_FILE\" ]; then",

" echo 'Uploading archive to S3 bucket...'",

" aws s3 cp \"$ARCHIVE_FILE\" s3://{{S3BucketName}}/logs/$INSTANCE_ID/logs_$DATE_TIME.tar.gz",

"else",

" echo 'Archive file does not exist. Listing files in /tmp directory...'",

" ls -l /tmp/",

" exit 1",

"fi"

]

}

},

{

"action": "aws:runShellScript",

"name": "Cleanup",

"inputs": {

"runCommand": [

"#!/bin/bash",

"echo 'Cleaning up local archive...'",

"rm -f /tmp/logs_$(jq -r '.Timestamp' /tmp/log_metadata.json).tar.gz",

"rm -f /tmp/log_metadata.json"

]

}

}

]

}

Member discussion